LLMs for Generating and Evaluating Counterfactuals (EMNLP’24)

“What minimal changes to this text would cause the text classifier to change its prediction?” Counterfactual texts, i.e. minimal changes to inputs that alter a model’s predictions, are an important technique in Explainable AI (XAI) for understanding model behaviour.

Bach and Paul evaluated the ability of open-source and closed-source LLMs (GPT-4, GPT-3.5, LLAMA-2, Mistral) to generate counterfactual texts in a variety of tasks (sentiment analysis, natural language inference, and hate speech detection).

Results in a nutshell:

- LLMs excel at generating fluent counterfactuals, but often make excessive changes.

- Generating counterfactuals for sentiment analysis is easier than for natural language inference and hate speech detection, where label reversal is less reliable.

- Human-generated counterfactuals outperform LLM-generated counterfactuals for data augmentation.

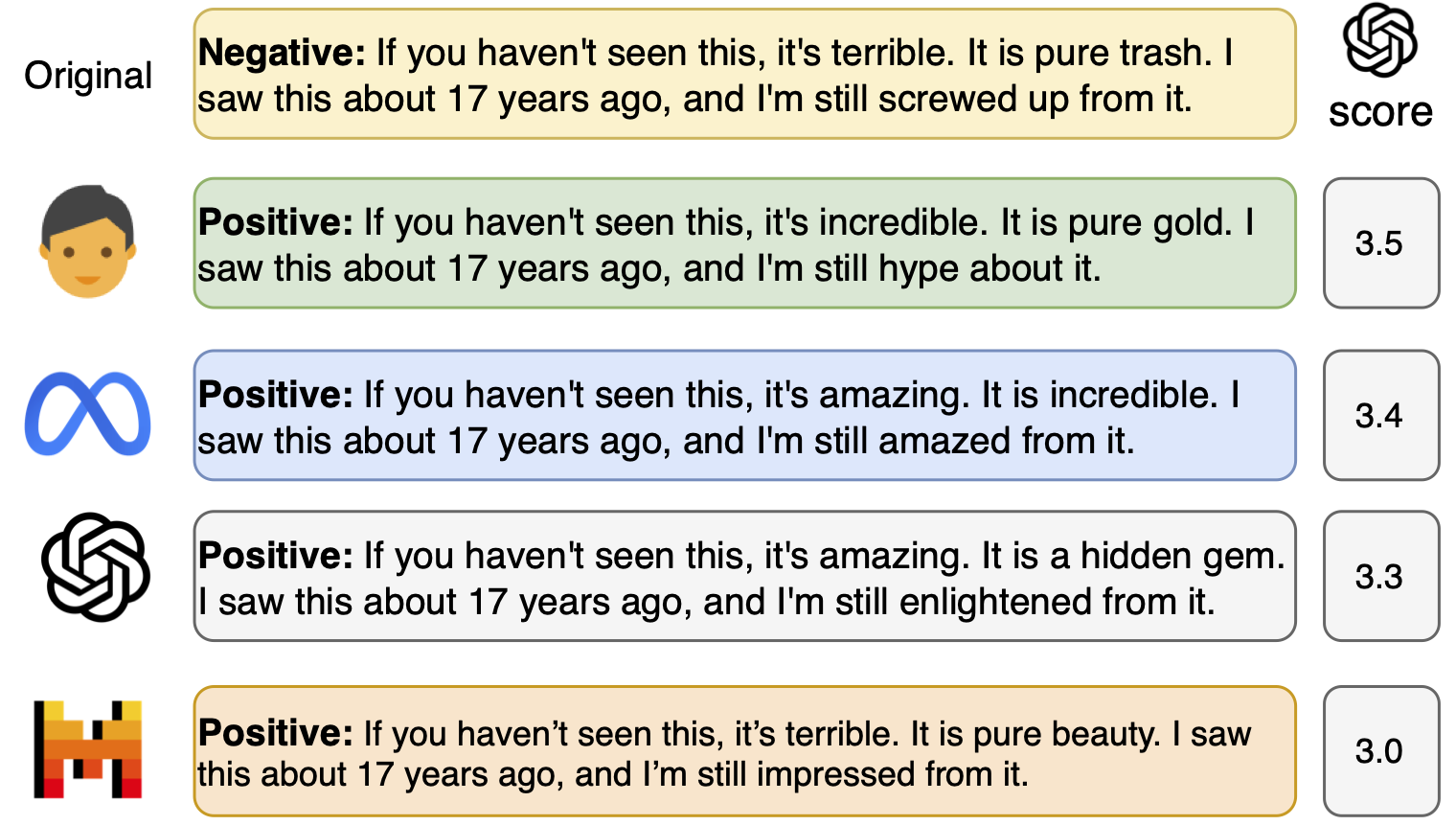

- Using LLMs to automatically assess the quality of generated counterfactuals is prone to bias: GPT-4 in particular tends to favour its own results.

Links

V. B. Nguyen, P. Youssef, C. Seifert, and J. Schlötterer, “LLMs for Generating and Evaluating Counterfactuals: A Comprehensive Study,” in Findings of the Association for Computational Linguistics: EMNLP 2024, Y. Al-Onaizan, M. Bansal, and Y.-N. Chen, Eds., Miami, Florida, USA: Association for Computational Linguistics, Nov. 2024, pp. 14809–14824. Available at: https://aclanthology.org/2024.findings-emnlp.870 PDF