LLMs for Counterfactuals (EMNLP’24)

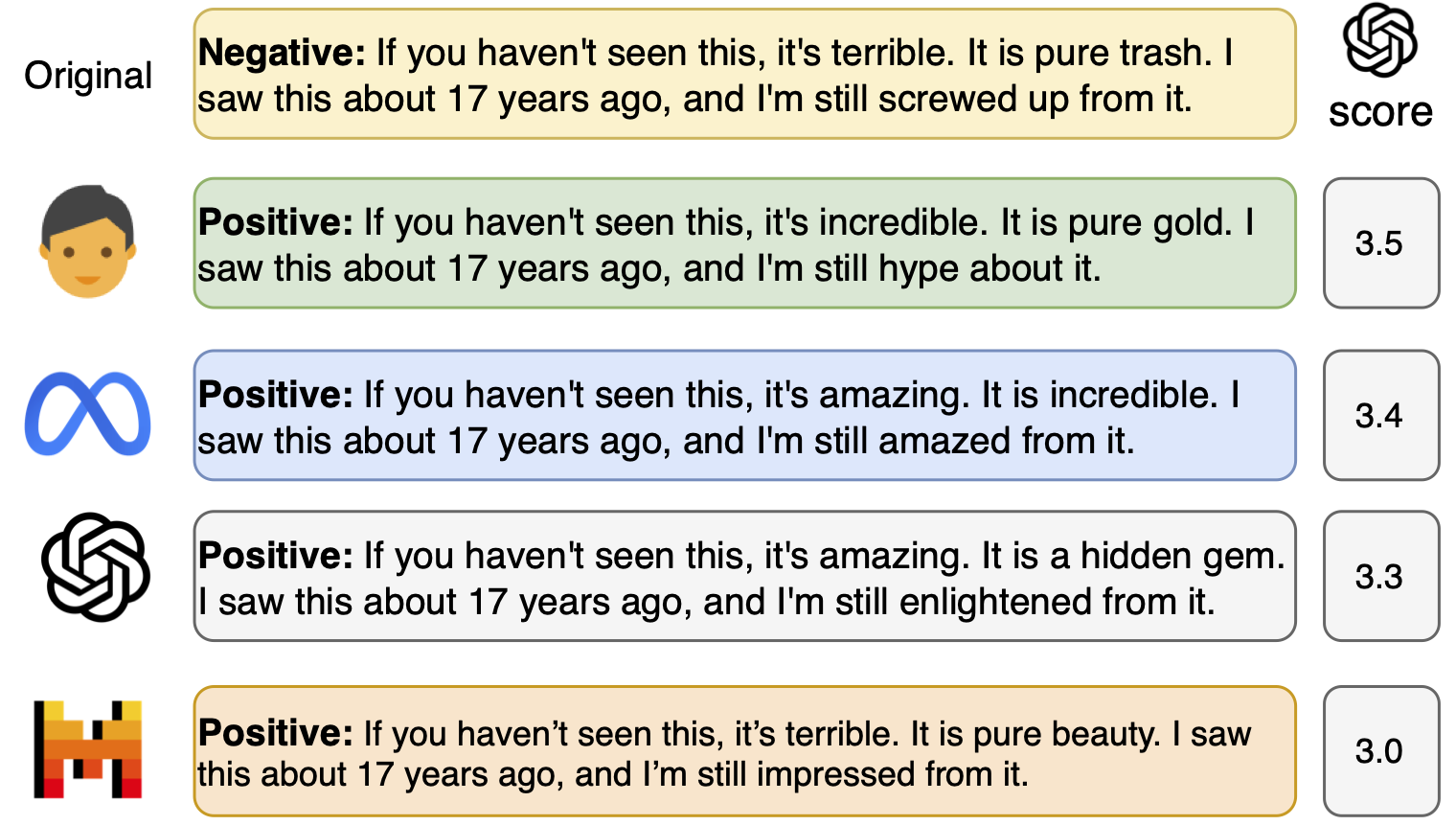

What minimal changes to this text would cause the text classifier to change its prediction?

Counterfactual texts, i.e. minimal changes to inputs that alter a model’s predictions, are an important technique in Explainable AI (XAI) for understanding model behaviour.
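To make the definition concrete, here is a minimal sketch of how one might check a candidate counterfactual: did the label flip, and how much of the text changed? The keyword classifier `toy_sentiment`, the helper names, and the example sentences are all hypothetical stand-ins for illustration and are not taken from the paper.

```python
from typing import Callable


def toy_sentiment(text: str) -> str:
    """Hypothetical keyword classifier standing in for a real model."""
    negative = {"bad", "boring", "terrible", "awful"}
    tokens = text.lower().split()
    return "negative" if any(t in negative for t in tokens) else "positive"


def token_edit_distance(a: list[str], b: list[str]) -> int:
    """Levenshtein distance over tokens (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, tok_a in enumerate(a, start=1):
        curr = [i]
        for j, tok_b in enumerate(b, start=1):
            cost = 0 if tok_a == tok_b else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def check_counterfactual(
    original: str, candidate: str, classify: Callable[[str], str]
) -> tuple[bool, float]:
    """Return (label flipped?, token edit distance normalised by original length)."""
    orig_tokens = original.split()
    cand_tokens = candidate.split()
    flipped = classify(original) != classify(candidate)
    distance = token_edit_distance(orig_tokens, cand_tokens) / max(len(orig_tokens), 1)
    return flipped, distance


original = "The film was boring"
candidate = "The film was gripping"
flipped, distance = check_counterfactual(original, candidate, toy_sentiment)
print(flipped, distance)  # True 0.25
```

A good counterfactual flips the label while keeping the normalised distance small; here a single substituted word out of four is enough.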

Bach and Paul evaluated the ability of open-source and closed-source LLMs (GPT-4, GPT-3.5, LLAMA-2, Mistral) to generate counterfactual texts in a variety of tasks (sentiment analysis, natural language inference, and hate speech detection).

Results in a nutshell:

- LLMs excel at generating fluent counterfactuals, but often make excessive changes.

- Generating counterfactuals for sentiment analysis is easier than for natural language inference and hate speech detection, where label reversal is less reliable.

- Human-generated counterfactuals outperform LLM-generated counterfactuals for data augmentation.

- Using LLMs to automatically assess the quality of generated counterfactuals is prone to bias: GPT-4 in particular tends to favour its own results.
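The reliability of label reversal mentioned above can be expressed as a flip rate: the share of generated counterfactuals that actually change the classifier's prediction. The sketch below assumes a hypothetical keyword classifier and made-up sentence pairs; it illustrates the idea only and is not the paper's evaluation code.

```python
from typing import Callable


def toy_classifier(text: str) -> str:
    """Hypothetical stand-in for the classifier being explained."""
    negative = {"terrible", "bad", "awful"}
    return "negative" if any(t in negative for t in text.lower().split()) else "positive"


def flip_rate(pairs: list[tuple[str, str]], classify: Callable[[str], str]) -> float:
    """Fraction of (original, counterfactual) pairs whose predicted label changes."""
    if not pairs:
        return 0.0
    flips = sum(classify(orig) != classify(cf) for orig, cf in pairs)
    return flips / len(pairs)


# Made-up examples: two pairs flip the label, two do not.
pairs = [
    ("great movie", "terrible movie"),
    ("I loved it", "I liked it"),
    ("bad acting", "good acting"),
    ("awful plot", "awful story"),
]
rate = flip_rate(pairs, toy_classifier)
print(rate)  # 0.5
```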

Reference

- Van Bach Nguyen, Paul Youssef, Christin Seifert, and Jörg Schlötterer. LLMs for Generating and Evaluating Counterfactuals: A Comprehensive Study. Findings of the Association for Computational Linguistics: EMNLP 2024. 2024.

BibTeX

    @inproceedings{Nguyen2024_emnlp_llms-for-generating-counterfactuals,
      author    = {Nguyen, Van Bach and Youssef, Paul and Seifert, Christin and Schl{\"o}tterer, J{\"o}rg},
      booktitle = {Findings of the Association for Computational Linguistics: EMNLP 2024},
      title     = {{LLM}s for Generating and Evaluating Counterfactuals: A Comprehensive Study},
      year      = {2024},
      address   = {Miami, Florida, USA},
      editor    = {Al-Onaizan, Yaser and Bansal, Mohit and Chen, Yun-Nung},
      month     = nov,
      pages     = {14809--14824},
      publisher = {Association for Computational Linguistics},
      code      = {https://github.com/aix-group/llms-for-cfs/},
      file      = {:own-pdf/Nguyen2024_emnlp_llms-for-generating-counterfactuals_publisher.pdf:PDF},
      url       = {https://aclanthology.org/2024.findings-emnlp.870}
    }